Chapter 12

Simulation and Self-conversation in Computational Contexts

In the history of ideas and disputes about Artificial Intelligence (AI), one of the most frequently cited items is a simple rules-based computer program called DOCTOR. The script for its conversational interface with the user is written as a parody of a non-directive initial therapeutic interview with a patient. Its crude language analysis program is named "Eliza" (after the flower girl in Pygmalion) to indicate its poor command of English. Joseph Weizenbaum, who wrote the program more than two decades ago, was startled to see how quickly and deeply people conversing with the program "became emotionally involved with the computer". Even when told of the program's limitations, some people wanted to confide in it, and even asserted that they felt understood by it.1 This came to be termed the Eliza-effect, designating the illusions that may arise during conversations with a computer, and it was held up as a warning of how easily people could be fooled by the tricks of a computer program.

However, as I shall indicate later, the user's feeling of being understood need not be an illusion, even though the attribution of understanding to Eliza is misplaced.

There are computer programs in current development to which the designers attribute the capacity for understanding. For example, Roger Schank speaks of some of the computer programs designed in the AI laboratory at Yale as understanding systems, offering more than just making sense to the user. According to him, a computer program, in order to understand, would have to be able to "carry on a dialogue with itself about what it already knows and what it is in the process of trying to understand."2

What, then, is involved when one sometimes attributes to an artifact, such as a computer program, the capacity for understanding? What does it mean to get emotionally involved in a conversation with a computer program? What lessons can be learned from computer simulations? Why is it that in cognitive science and simulation endeavors we tend to describe artifacts in the image of human beings, and then sometimes come to describe human processes in the image of artifacts? In this chapter I shall turn to these issues and attempt to account for the Eliza-effect in terms of the thesis about the mind's dialogic nature.

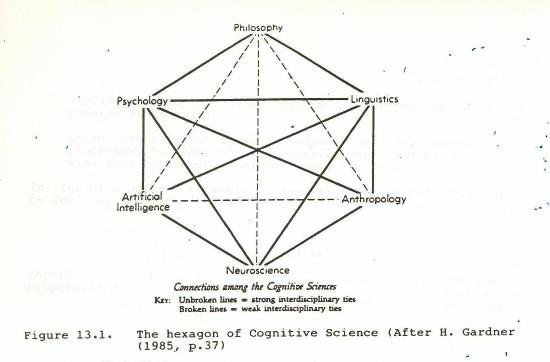

The hexagon of Cognitive Science

Focusing on the computer as a symbolic computational device, the discipline of Artificial Intelligence (AI) defines its perspective as that of physical symbol processing. The ground was laid by Newell and Simon, who proposed the hypothesis that a physical symbol system has the necessary and sufficient means for intelligent action. They consider both the human brain and a symbolic Lisp machine to be instances of such physical symbol systems. An identifying characteristic of the AI culture has been the use of the Lisp family of programming languages, supplemented by logic programming and, later, by object-oriented languages, which originated in the Simula conceptions of the late 1960s.3

Recently there has also been a renewal of interest in neurocomputational nets that exhibit self-organizing and learning behavior. This relates to the cybernetic ground laid in the 1940s and 1950s by McCulloch and others who were concerned with neurocomputation and self-organizing systems. For example, Marvin Minsky, one of the AI pioneers responsible for breaking away from this ground, now writes about the society of mind in terms of net structures. Thus, gradually, the focus on symbolic knowledge representation has been widened and supplemented. Symbolic representation may still be seen as necessary, but not sufficient, for language understanding, reasoning, learning and problem-solving.

Failures to achieve some of the early objectives, for example with respect to natural language processing systems, gave rise to an awareness of the need for knowledge about human processes of cognition in their social and situational contexts.

The multidisciplinary program of Cognitive Science emerged.4

In the state-of-the-art report of 1978, the interdisciplinary emphasis of cognitive science is expressed in the above way by researchers who share this objective: "to discover the representational and computational capacities of the mind and their structural and functional representation in the brain."5 While there is still an identification with the engineering design concern of AI, the discovery orientation, in terms of information processing and symbolic representations, extends an invitation to various relevant sciences: knowledge about mental phenomena in psychology, about grammar rules in linguistics, about the analysis of knowledge in philosophy, about neurophysiological processes in the brain, and about cultural context in social anthropology.

Recently, there have even been links to research on child development. For example, Roger Schank's concern with the nature of human memory in his computer program development is shared by Daniel Stern in infancy research.

In the quest for unifying conceptions, which has predominated in the cognitive science endeavors, there may be heuristic boomerangs involved in the attempt to describe human beings in the computational Gestalt and computers in the human Gestalt. But while we tend to write computer programs in the monadic and monologic image of ourselves, such implemented characteristics may also come to evoke complementary processes and activate the internal dialogue in the designer or the user: not as a function of the symbolic artifact, but as something emerging from the nature of the user's mind. Hence, the very effort to simulate ourselves may raise questions about our own nature which otherwise might not have been posed.

Computer simulation models as exploratory means

When computer programs are used for the purpose of expressing and investigating theories of cognition, this is sometimes considered a matter of necessity, especially in the case of highly complex theory formulations. Some of the pathfinders in the field regard the method of computer simulation as one of the few methods that exist for investigating complex symbol- and information-processing theories of cognition.6

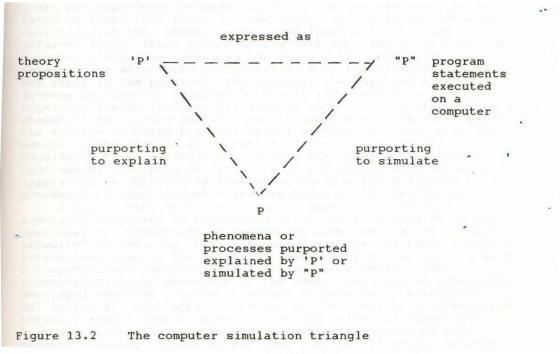

Such use of computer simulations concerns the relations between these three levels:

first, a theory level of assumptions and propositions 'P';

second, a computer operational level of program statements, "P", that may partly express 'P' and allow the implications of the theory to be derived through computer execution of the program statements;

third, the referent level of phenomena or "real-world" processes, P, recorded and purportedly explained by 'P' or simulated by execution of the computer model, "P".

In line with Ogden's triangle in semantics, these relations may be depicted as in Fig.13.2.

The diagram may be used to distinguish strong and weak views of the explanatory power of computer simulations. As long as only the uppermost vertical relation, between 'P' and "P", is considered, computer simulations are seen to serve as a means of making theoretical assumptions precise and exploring their properties, without warranting any claim about the tenability of these assumptions. This is the weak view of computer simulation as a viable method of inquiry into cognitive processes.

A stronger view is implied when computer simulation is considered to permit the clarification and explanation of properties and processes at the referent level, that is, in the domain of the phenomena of human cognition, as purportedly explained, represented, generated, or indicated by the theory 'P' in terms of computer experiments with "P". For example, the General Problem Solver, developed by Allen Newell and Herbert Simon in 1957,7 and the subsequent SOAR program,8 have been regarded in this strong manner by their designers. SOAR is a computer program with the capacity to adapt itself when it reaches an impasse in its problem-solving: it leaves its ordinary sequence of operations and sets up a subgoal that may resolve the impasse, before continuing from the point where it left off. The successful resolution of the impasse is stored in the SOAR base for future reference, so that this particular impasse will not recur.9
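The impasse-and-subgoal cycle described above can be illustrated with a deliberately tiny sketch. It is not SOAR's architecture (SOAR works with production rules, problem spaces and a more elaborate chunking mechanism); the class `ToySolver`, its rule table and the `subgoal` function are hypothetical stand-ins, chosen only to show how storing the result of a resolved impasse keeps that same impasse from recurring.

```python
from typing import Callable, Dict, Optional


class ToySolver:
    """Hypothetical toy solver: impasse -> subgoal -> stored resolution.

    Not SOAR itself; it only mimics the cycle described in the text.
    """

    def __init__(self, rules: Dict[str, str], subgoal: Callable[[str], str]) -> None:
        self.rules = dict(rules)  # ordinary operator knowledge: state -> next state
        self.subgoal = subgoal    # slower, deliberate method used only at an impasse
        self.impasses = 0         # how often the ordinary sequence got stuck

    def step(self, state: str) -> str:
        result: Optional[str] = self.rules.get(state)
        if result is None:
            # Impasse: no ordinary rule applies, so leave the ordinary
            # sequence and set up a subgoal to resolve it.
            self.impasses += 1
            result = self.subgoal(state)
            # Store the resolution (loosely analogous to SOAR's chunking),
            # so that this particular impasse will not recur.
            self.rules[state] = result
        # Continue the ordinary sequence from where it left off.
        return result


solver = ToySolver({"start": "middle"}, subgoal=lambda s: s + "-resolved")
print(solver.step("start"), solver.impasses)    # middle 0
print(solver.step("unknown"), solver.impasses)  # unknown-resolved 1
print(solver.step("unknown"), solver.impasses)  # unknown-resolved 1
```

The second encounter with the same state is handled by the stored rule, so the impasse counter does not grow: the "learning" here is nothing more than remembering a resolved impasse.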

This raises the question of whether a match between the output of the simulation system "P" and the real-world output of P, given corresponding inputs to the two systems, can be taken to imply some degree of similarity between the operations at the computer simulation level and those at the human referent level. Those who hold a strong view of the possibility that computer simulation may reveal the essence of underlying, intermediate structures and processes P at the referent level may reply in the affirmative. For example, the physical symbol system hypothesis, advanced by Newell and Simon as a basis for cognitive simulation and AI, invites such a strong view, since a computational system and the human brain are considered to share the essence of being physical symbol systems. But even when the natures of the systems in operation at the two levels are considered to be related, a more moderate view will recognize that matching outputs of "P" and P in situations with identical inputs can be obtained through quite different processes of input-output transformation at the two levels.
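The moderate view's point, that matching outputs can come from quite different processes, can be shown with two functions chosen purely for illustration (they are not drawn from the simulation literature). Both map n to the sum 1 + 2 + ... + n: one counts step by step, performing n additions, while the other applies the closed form n(n+1)/2 in a single calculation. An observer who compares only inputs and outputs cannot tell them apart.

```python
from typing import Callable, Iterable


def sum_by_counting(n: int) -> int:
    """Process A: accumulate 1 + 2 + ... + n one step at a time."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_by_formula(n: int) -> int:
    """Process B: Gauss's closed form, with no iteration at all."""
    return n * (n + 1) // 2


def outputs_match(f: Callable[[int], int], g: Callable[[int], int],
                  inputs: Iterable[int]) -> bool:
    """Compare two systems purely by their input-output behavior."""
    return all(f(x) == g(x) for x in inputs)


print(outputs_match(sum_by_counting, sum_by_formula, range(500)))  # True
```

However long the record of matching outputs, it remains compatible with quite different internal processes.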

A strong view of computational approaches is also sometimes expressed in neurocomputation, to be turned to in the next chapter. Connectionist models are sometimes called "neural networks" to indicate that they simulate neurons and their synaptic interconnections in the brain. This approach shares with AI the concern with computation, and in principle considers the neurophysiological structure of the human brain and an adequately developed computer program to adhere to the same basic principles. But unlike AI, this approach does not work at the level of a rules-based physical symbol system, with implemented rules and ready-made representations. The emphasis is on neuron-like networks that permit training. To the extent that a so-called neural network implementation is purported to accomplish what the label indicates, it represents a strong view of the power of computer implementation. First, it is sometimes assumed that the structure and processes of the neurophysiological system at level P can in principle be regarded as being of the same nature as a computer-implemented structure "P" and its executions. Second, it is often assumed that there is a structural, and possibly processual, resemblance between the implemented network "P" and real brain processes P in operation at the referent level. For example, neural net models may "learn" through training to "recognize" features of handwriting and speech,10 and even generate features of talk in a simplified manner. When the implemented net, given initial inputs and proper training, can reach a stable state that generates an output comparable to human output, then, according to the strong view, the implemented structure and executed processes are seen to resemble brain structure and processes.
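As a minimal illustration of what "learning through training" means in such models, the following sketch trains a single artificial neuron with the classic perceptron error-correction rule to reproduce the logical AND function. It is far simpler than the handwriting and speech networks mentioned above and makes no claim about real neurons; the function names and the choice of AND as a training task are assumptions made for the example. Integer weights and a learning rate of 1 keep the arithmetic exact.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[int, int], int]  # ((input1, input2), target output)


def fires(w: List[int], b: int, x1: int, x2: int) -> int:
    """Threshold unit: output 1 if the weighted input sum exceeds zero."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20,
                     rate: int = 1) -> Tuple[List[int], int]:
    """Perceptron rule: after each error, nudge the weights toward the target."""
    w = [0, 0]  # connection ("synaptic") weights, one per input
    b = 0       # bias, playing the role of a firing threshold
    for _ in range(epochs):
        for (x1, x2), target in samples:
            err = target - fires(w, b, x1, x2)  # -1, 0 or +1
            w[0] += rate * err * x1
            w[1] += rate * err * x2
            b += rate * err
    return w, b


AND: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([fires(weights, bias, x1, x2) for (x1, x2), _ in AND])  # [0, 0, 0, 1]
```

No rule for AND is written into the program; the behavior arises from repeated weight adjustment, which is the sense in which such networks are said to be trained rather than programmed.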

In the more general context of computer simulation, such a strong view had earlier been questioned by Kenneth Mark Colby, a pioneer in the computer simulation of mental processes. Collaborating with Robert Abelson, Roger Schank and others, he developed a computer simulation model to explore a new theory of paranoia.11 It is expressed as a computer program in the form of a dialogue algorithm that permits interviewing in a restricted natural-language conversation.12 This opened up the possibility of comparing, during clinical interviews, the language behavior of patients with the behavior of the computer program under similar interview conditions.13 Comparison of ratings of the paranoid patients' responses and the program's responses showed a good resemblance. As Colby pointed out, however, even when the behavior of the simulation system resembles the behavior of real-world participants in response to similar stimulation, this does not imply that the program statements, "P", model the inner, underlying processes, P, that generate the responses of the human participants.

Accounting for the Eliza-effect

Another early computer program explored in the context of an interview, but this time imitating the role played by the interviewer, is the DOCTOR program, written almost as a parody by Joseph Weizenbaum. The script for its conversational interface with the user imitates, in a superficial way, the role played by a Rogerian psychotherapist in an initial interview with a patient. Its crude language analysis program is named "Eliza" (after the flower girl in Pygmalion) to indicate its deficient command of English. Eliza searches an off-line script for key words matching an incoming string. For example, if the word "mother" occurs in the input, the program finds a match and returns a question that includes the term MOTHER, or some class-related substitute term such as FATHER or FAMILY. If "time" is mentioned by the user, the program may repeat TIME? or ask why time is important. If no match is found for the incoming string, the program returns some standard string, such as VERY INTERESTING or PLEASE GO ON.
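The keyword mechanism just described can be sketched in a few lines. This is a hedged reconstruction for illustration only, not Weizenbaum's program: the original ELIZA also ranked keywords and used decomposition and reassembly rules to transform parts of the input, both omitted here, and the miniature `SCRIPT` and `FALLBACKS` tables below are invented.

```python
import re
from typing import Dict, List

# Hypothetical miniature script: keyword -> canned reply. A reply may echo
# the keyword or substitute a class-related term (MOTHER -> FAMILY).
SCRIPT: Dict[str, str] = {
    "mother": "TELL ME MORE ABOUT YOUR FAMILY.",
    "father": "TELL ME MORE ABOUT YOUR FAMILY.",
    "time": "WHY IS TIME IMPORTANT TO YOU?",
}

# Standard strings returned when no keyword matches.
FALLBACKS: List[str] = ["VERY INTERESTING.", "PLEASE GO ON."]


def respond(text: str, turn: int = 0) -> str:
    """Return the reply for the first scripted keyword found in the input."""
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in SCRIPT:
            return SCRIPT[word]
    # No match: cycle through the standard strings.
    return FALLBACKS[turn % len(FALLBACKS)]


print(respond("I keep thinking about my mother"))     # TELL ME MORE ABOUT YOUR FAMILY.
print(respond("The weather was dull today", turn=1))  # PLEASE GO ON.
```

Nothing in this loop represents the user or the meaning of what she types; it only matches strings against a table.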

Weizenbaum (1976:6;189) reports how easily people become involved in such conversations and how they may attribute understanding to the program. He was startled to see how quickly and how very deeply people conversing with DOCTOR became emotionally involved with the computer, and reports that people with little or no knowledge about computers would often demand to be permitted to converse with ELIZA in private. After conversing with it for a time, some of them would insist, in spite of his explaining its simple rule base to them, that they had felt understood. He calls this a remarkable illusion, and compares it to members of a theatre audience who soon forget that the action they are witnessing is not real. Of interest in the present context is not what such reactions may tell us about this particular program, but what such reactions, and the reactions to these reactions, may tell us about the human mind and about people in conversation, with themselves and with others.

Weizenbaum's report is clearly a report about cases of misplaced attribution of understanding. And yet, when it is cited as a demonstration of how easily people could be fooled, forgetting that they were "conversing" with a not very smart computer program, this may be questioned in view of the present thesis. Did they forget that they were "conversing" with a computer while sitting at a keyboard which they knew linked them to a computer? Can the feeling of being understood, in the minds of some of those who "conversed" with ELIZA, so easily be labeled and discarded as an illusion? And what about their involvement: did they, as Weizenbaum suggests, become emotionally involved with the computer, or do their activated feelings perhaps involve a radically different domain?

In terms of the virtual other, the Eliza-effect may be accounted for in this way: when the user attributes understanding to the computer program, this may be regarded as an indication that interaction with the program has activated the dialogical process in the user, that is, activated her dialoging with herself. In other words, the dialogue with the user's virtual other has been stimulated by the event of being exposed to the computer program. It is not a dialogue between the user and the computer; it is a dialogue occurring in the user's space for dialoging with her virtual other, actualized by the event of exposure. This may also account for the emotional involvement: it is not an emotional involvement with the computer, but an involvement with herself; that is, there is dialoging with her virtual companion in a mode of felt immediacy. She is talking intimately with herself, through her dialoging with her virtual other. Comprised within this self-creative dialogic network of her mind, the computer merely serves as an external means of actualizing this internal dialogue in a mode of felt immediacy with her virtual other, not with the computer as an understanding actual other.

Chapter 5 gave an account of the way in which the infant makes use of some object, such as a doll or a blanket, in the process of continuing protodialoging (compare Fig.4.1 (iii)(iv)). The Eliza-effect may be considered in an analogous manner.

In the absence of any actual companion, the computational object is transformed into an actualized subject in the virtual companion space of the user, and comprised within the self-creative dialogic network of the user. In terms of this scheme, then, the user's inner dialogue re-creates itself by comprising the computer (interface) as a part of the dyadic network of mind in the space of the user's virtual companion. In other words, the user engages in a conversation with herself by incorporating the computer in the ongoing dialogic network of her mind. Even when knowing the tricks and limitations of a conversing computer program, users may want to be alone with it, to confide in it, and may feel that they are being understood by it.14 In terms of the above scheme such a reaction is only to be expected: the program may have contributed to the activation of the user's dialogue with herself, that is, with her virtual other.

For example, Sherry Turkle reports how she often saw people trying to protect their relationship with the Eliza program by avoiding input statements that would have resulted in nonsense output from the program.15 This reflects a lack of actual companions, which may stem from unsatisfactory relations with actual others. As referred to in chapter 6, Turkle has studied children from age four to fourteen in interaction with computer programs and highly interactive objects that "talk, teach, play, and win". She finds that children discuss interactive features of the computer in psychological rather than physical or mechanical terms. Just as the world of traditional "objects" seems to serve as a ground for a child's construction of physical things, computers appear to serve, in part, as material for the children's construction of the psychological. This psychological discourse seems to become more pervasive and nuanced with age and sophistication: the more contact children have with computational objects, the more elaborate this psychological language becomes. Distinctions are made, for example, between thinking and feeling. Some of the children define themselves not by how they differ from animals, but by how they differ from computers, for example by having feelings: the computer may be smart, but it cannot feel anything. Yet the children sometimes seem to want their computer to be alive. Turkle recounts how five-year-old Lucy makes her Speak and Spell her constant companion. Lucy works out a special way to make the program come 'alive'.16

This may be compared to the way in which adults who fully knew the limitations of Weizenbaum's Eliza program nevertheless confided in it, wanted to be alone with it, and even asserted that they felt understood by it.17 In terms of the thesis developed in this book, such an attribution is only to be expected: the program may have contributed to the activation of the user's dialogue with herself, that is, with her virtual other. Reported cases of children and adults conversing with a computer program may thus be seen as reports of children and adults using computational means to reactivate the dialogic circle in themselves.

One observer may see the user in conversation by means of a computer as an external structural relation between a self-enclosed subject and an other-controlled artifact. Another may regard it as two physical (symbol) systems linked by an interface. But such ways of viewing the interaction leave the reported Eliza-effect a puzzle. In terms of the present thesis, both the experience of being understood and the feeling of involvement make sense: they may be seen as cases where a computational means is made part of a self-creative dialogic network by virtue of the mind's virtual companion. The complementary perspective of the user's virtual companion is projected onto the computer in a way that makes it a means for the dialogic network of mind to recreate itself. When users informed Weizenbaum "that the machine really understood", this may be accounted for in the following way: the computer program serves as a self-reflective means of activating the internal dialogue with the virtual alter. The reported feeling of being understood may be discarded as an illusion by the outside observer, but it is still no illusion: a feeling of being understood is a feeling of being understood, in this case by one's virtual companion.

Views on understanding in Cognitive Science

There are computer programs in current development to which the designers attribute the capacity for understanding. For example, Roger Schank speaks of some of the computer programs designed in the AI laboratory at Yale as understanding systems, offering more than just making sense to the user. According to him, a computer program, in order to understand, would have to be able to "carry on a dialogue with itself about what it already knows and what it is in the process of trying to understand."18

There has been a tendency in the AI tradition to apply terms from human domains to the computational domain. Conversely, there has been a tendency in psychology to apply terms from computational domains to human cognitive domains. The former tendency is reflected in the Japanese proposals for "fifth" and "sixth" generation computers. The latter is reflected in textbooks and introductory chapters on cognitive psychology.

Sometimes without qualification, and without quotation marks to suggest metaphoric usage, processes of the mind are referred to in such terms as "retrieval", "top-down processes", "storage", "error signals" and "control systems". This is a fruitful way of stressing operational aspects and suggesting powerful analogies with computational processes. But such terms may invite confusion with the phenomenological and ecological aspects of the very human processes that are purportedly explained, imitated or approached, and the fruits of analogical reasoning may be lost. In physics, for example, Niels Bohr used his knowledge of the solar system to construct his orbital model of the atom. But he would never have dreamed of suggesting that the nucleus be called the "sun" and the electrons the "planets".

In his influential book Cognitive Psychology, Ulric Neisser,19 for example, adopted the computer metaphor of the mind as an information processing system, but later modified his position as he came to realize how it invited attempts to model consciousness as a stage of processing in a mechanical flow of information.20

In his state-of-the-art report, Howard Gardner predicts that cognitive science will have to deal with the various aspects of human nature that have hitherto been bracketed: the role of the surrounding context, cultural and otherwise, and affective aspects of experience.21 The assumed distinction between cognition and feelings may come to be crossed, or be considered in terms of cognitive net models, as Johnson-Laird22 is doing. Other leading representatives of cognitive science draw on social psychological knowledge about "hot" cognition (Abelson at Yale), turn to the pragmaticist foundation laid by Peirce in philosophy (Sowa at IBM Systems Research Institute), or to hermeneutics and phenomenology in emphasizing the role of human commitment and breakdown in understanding (Winograd at Stanford), or to the phenomenology of daily life, stressing failures as aspects of remembering (Schank at Yale).

When questions are raised about feelings of like and dislike as properties of belief systems, about failed expectations as a basis for reminding, and about failures of understanding, it may turn out that answers cannot be given in the information processing terms of symbolic representations. Winograd and Flores, for example, turn against the predominant rationalistic and monologic orientation of cognitive science. They refer to Gadamer's hermeneutic and dialogic orientation, and to Pask's conversation theory. They emphasize that the understanding of language requires a foundation that recognizes the closure of nervous systems, for example in terms of autopoiesis, and the thrownness of being, in Heidegger's terms of Dasein: a person is not an individual ego, but a manifestation of being-there in a space of possibilities, situated in a world.23

Thus, in some of the cognitive science milieus in the United States, views are being expressed that the current AI paradigm may need to be transcended, or related to phenomenological accounts of daily-life understanding and self-reflexivity, commitments and perhaps even feelings, as involved in the world in which we live and in which AI programs are designed to take part, make sense, and even exhibit that which some term "understanding". For example, while Schank considers the computer programs developed in his laboratory at Yale capable of exhibiting "cognitive understanding", he regards them as still far from approaching the kind of empathic understanding that humans sometimes experience.24

These are questions about inherently human processes which are neither "out there" in the form of designed objects, nor hidden in a private monadic world "without windows", not even when information is defined as in-formation, i.e. formation from within. Information processing and representational media can be destroyed, replaced or parted with, and they are always designed from a given perspective, even when created with a multi-perspective intent. The meaning-processing circles for which they are employed continue as ongoing dialogic activity within and between participating minds that are capable of shifting between mediate and immediate understanding, between perspectives that may suddenly come into focus through conceptual feelings, as distinct from pre-defined viewpoints narrowed by the blinkers of reason. The child's question about feelings in the prologue to Roger Penrose's book The Emperor's New Mind is pertinent.

The distinguished domain of common sense

There has been a de-emphasis in cognitive science of context and culture and, until recently, a bracketing of tacit knowledge and issues of feeling. Still, the crossroads involving AI has turned out to bear fruit, albeit mostly in the negative sense of revealing some of the limitations of computational logic. One fruitful lesson learned from the advances and failures of AI research and development concerns the advanced features of human understanding which, unlike our capacity for mathematical and logical reasoning, have offered resistance to computational approaches. Our most advanced abilities appear to be those of common sense and of partaking in a meaningful manner in the daily life world in which we live. We are able to engage in lifeworlds and understand each other, sometimes even without any words being uttered at all. It is by virtue of the fundamental demand that theories and assumptions be put in computer-operational form that fruitful insights of this kind have emerged from the AI and cognitive science endeavors. Not all of them are original findings. For example, Husserl's lifelong work in phenomenological analysis forced him to conclude that there was something residual which could not be included in the parentheses of the epoché, namely the prerequisite for being able to put anything in parentheses at all. His discovery of the lifeworld emerged from a consistent practice pursued in a near-operational manner.

Attempts at creating artificial systems capable of developing "understanding" abilities and of "acquiring knowledge" have invited recourse not only to phenomenology, but also to basic findings in cognitive and developmental psychology by such pioneers as Vygotsky, Piaget and Inhelder, Brown and Bruner about children's language acquisition, and perhaps even beyond that: to the basic issues raised in chapters 3 and 4 of how infants seem capable of an immediate understanding, unmediated by the linguistic symbols they will later acquire, by virtue of dialogic circles that seem to allow for a primitive appreciation of other minds.

Cognitive science is now also entering the domain of neural nets, which AI left behind along with its earlier cybernetic grounding. Biocomputation emerges as a complement to symbolic computation. The AI pioneer Marvin Minsky, for example, who bears some of the responsibility for the setback in support for neurocomputational network approaches, contributes to bringing them in "from the cold", as it were, in his recent work on the society of mind.25 In the following chapter some current neurocomputational efforts will be considered.

NOTES TO CHAPTER 13

1 J. Weizenbaum: Computer Power and Human Reason, W.H. Freeman and Company, San Francisco 1976, pp.6-7, 189.

2 Schank....p.166

3 G.M. Birtwistle, O.J. Dahl, B. Myhrhaug & K. Nygaard: Simula Begin, Lund/Philadelphia 1973. See also: Max Rettig: "Using Smalltalk to Implement Frames". AI Expert 2 (1) 1987, pp.15-17.

4 D.A. Norman (ed.): Perspectives on Cognitive Science. Norwood, N.J. 1981.

5 Sloan Foundation State of the Art Report, 1978, p.6, quoted in H. Gardner: The Mind's New Science, New York: Basic Books 1985, p.36.

6 A. Newell and H. Simon: 'Simulation I: Individual Behavior' in: D.L. Sills (Ed.) International Encyclopedia of the Social Sciences, vol.14, New York, pp.262-268.

7 A. Newell and H. Simon: 'GPS: A Program that Simulates Human Thought' in: E.A. Feigenbaum and J. Feldman (Eds.): Computers and Thought, New York 1963, pp.279-296.

8 M. Mitchell Waldrop: 'Soar: A Unified Theory of Cognition?' Science, vol.241 (15 July) 1988, pp.27-29. SOAR stands for State, Operator, And Result, and was developed by Allen Newell in collaboration with John E. Laird and Paul S. Rosenbloom.

9 The recorded problem-solving behavior of the program under a given set of specified input values and boundary conditions may inform about the implications of the implemented theoretical assumptions. It may even permit comparison with recorded human problem-solving behavior under well-defined conditions in real-world or laboratory contexts that resemble the computer-implemented conditions.

10 Reference to neural networks...

11 K.M. Colby, S. Weber and F.D. Hilf: 'Artificial Paranoia'. Artificial Intelligence 2, 1971, pp.1-25. See also: K.M. Colby: 'Simulation of Belief Systems', in: R.C. Schank and K.M. Colby (eds.) Computer Models of Thought and Language, San Francisco 1973, pp.251-286.

12 Colby's program generates twists of domains in the answer-reply sequences that appear consistent with paranoid language behavior. During a visit with him at the Stanford Centre for AI, when he was in the process of testing it out, he gave me the opportunity of "conversing" with the program. I found that it even replied in well-formed strings to nonsense input strings conforming to English syntax. This I had expected of the program, but would not expect of the patients it purported to simulate.

13 A number of interviews were conducted by psychiatrists, by means of teletype and a buffer arrangement (so as to remove extra- and para-linguistic differences), without their being informed that a computer program was involved. Each psychiatrist interviewed two "respondents", one of them the computer program, the other a patient independently diagnosed as paranoid. A second group of clinicians read transcripts of the interviews, including control interviews with patients without such a diagnosis, and rated each response on a scale of paranoia.

14 Joseph Weizenbaum: Computer Power and Human Reason, W.H. Freeman, San Francisco 1976, p.189.

15 S. Turkle, op.cit., p.31.

16 Sherry Turkle: The Second Self, Granada, London 1984, pp.41-42.

17 Joseph Weizenbaum: Computer Power and Human Reason, W.H. Freeman, San Francisco 1976, p.189.

18 Schank....p.166

19 U. Neisser: Cognitive Psychology. New York: Appleton-Century-Crofts 1967.

20 U. Neisser: Cognition and Reality. New York: W.H. Freeman and Company 1976.

21 H. Gardner: The Mind's New Science, op.cit., p.387.

22 P. Johnson-Laird...........emotions.....

23 T. Winograd and F. Flores: Understanding Computers and Cognition. Norwood, N.J.: Ablex 1986, p.33.

24 His approach has its roots in pioneering work in the computer simulation of cognition and language processing begun in the sixties at the Centre for AI at Stanford, where he worked with Kenneth Mark Colby.

25 M. Minsky: The Society of Mind. London: Picador 1987.
